Working at Theorem: a typical day

I joined Citrusbyte (now Theorem) 9 years ago, and it has been a fully remote experience since day 1. Over the years I’ve learned a lot about how to organize myself for each new workday, although I never gave it much thought until a few days ago, when a candidate I was interviewing asked what a typical day at Theorem looks like. This post tries to answer that question.

First and foremost, a disclaimer: by no means do I speak on behalf of Theorem or the rest of my teammates. These are entirely my own experiences and do not necessarily reflect the reality of all the great people working at this company.

I live with my girlfriend and our two lovely kids (4 years old and 1.5 years old), so keep that in mind while reading this post.

As you are aware, at the time of writing we are living in very strange times: a global pandemic has most of the world in quarantine, with people confined to their homes and working remotely. As stated earlier, at Theorem we have been 100% remote since the beginning, so the COVID-19 pandemic didn’t change much about how we work, although it did have some effects.

Pre-pandemic typical workday

I have an office 20 blocks away from home, and my kids went to kindergarten 4 blocks away from the office. Days started at 6:15 am so we could enjoy breakfast as a family; then we would drive the kids to school, drop them off, and head to the office. My workdays therefore usually started at 8:00 am.

Office

The very first thing I usually do on any given day is go through my emails and any pending notifications from the day before. If something requires my attention immediately, I answer it right away; otherwise, I either archive the message or snooze it until later.

Next I check the status of any ongoing tasks I have been working on and pave the way for what’s next. Then, off to work.

Around noon either my girlfriend or I pick up the kids from school, take them home for lunch, and then head back to the office. If I’m the one picking them up, I do that during my lunch break and then have a quick bite or snack. Otherwise, I cook something for myself or order delivery. During this break I might read or watch something.

Then the rest of the day goes on until 4:00 pm or 5:00 pm, depending on the day, and I walk back home. That concludes a typical workday.

Typical workday during the pandemic

Things have changed, clearly. We no longer wake up at 6:15 am; now it’s usually around 8:00 am. Breakfast is served, and I use this time to catch up on the news and go through my emails and notifications, again snoozing whatever needs my attention for an hour or so. The rest is archived.

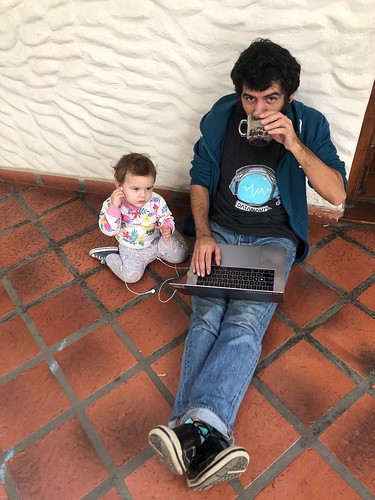

I’ve set up a standing desk in my bedroom, which is right next to the living room, where the kids spend most of their time playing. The biggest change since the pandemic began is that I no longer have long stretches of work time, so I try to split my tasks into smaller time slots. That way I can check on my kids, play with them, or help them with homework (yes, even my 1.5-year-old daughter has Zoom meetings now).

Working from home with kids

Lunch and dinner are usually planned the night before, so at around noon either my girlfriend or I start preparing lunch. The kids love this time, as they get to watch something on Netflix. I had to cancel all my meetings during this time, but that doesn’t seem to have affected my work. Asynchronous communication works great!

After lunch, work continues. I might be able to get some work-only hours if the kids decide to nap; otherwise, the afternoon is again split into smaller chunks of time. Either way, I’m still able to deliver on my commitments.

Conclusions

As you can see, not much has changed, other than how many hours at a stretch I can work without interruptions. All the other work details were already in place, given that we are a 100% remote company. The biggest takeaway, for me, is that in order to survive this crazy new world we are living in, you need to work with great people: people who understand that not all experiences are equal, who trust you to work with professionalism and responsibility, and whom you trust in the same way.

If you liked what you read and would like to be part of this great team, check out our careers page and apply to any of our current openings. Who knows? Perhaps your dream job is waiting for you.